January 9, 2026

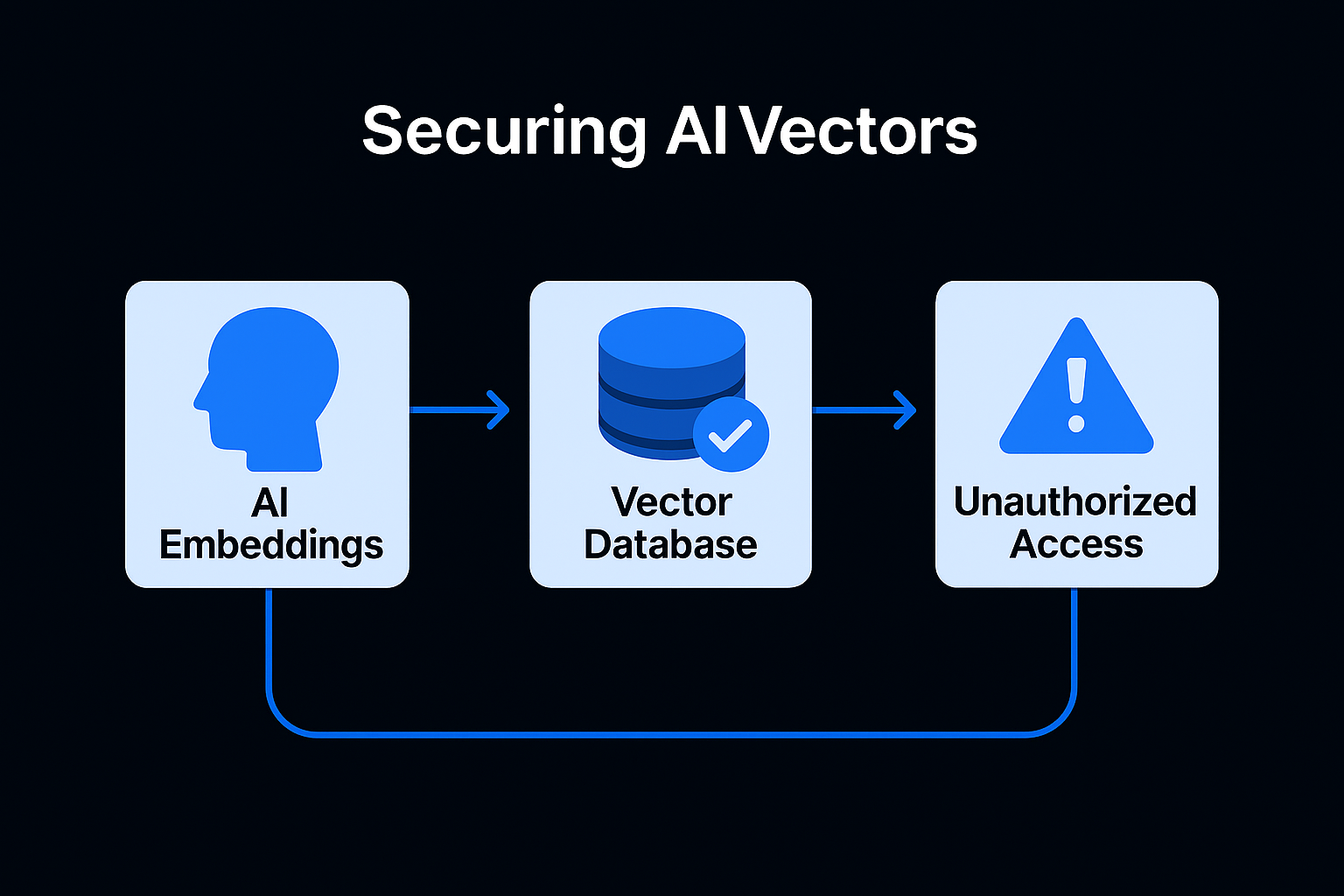

In our journey through the AI Security Framework, we’ve secured the prompts, the agents, and the supply chain. But in 2026, the most valuable—and vulnerable—asset in your GenAI stack isn't the model itself; it’s the Vector Database.

Think of the LLM as the "brain" and the Vector Database as the "long-term memory." Through Retrieval-Augmented Generation (RAG), the AI "remembers" your company’s PDFs, emails, and database records by converting them into mathematical coordinates called embeddings.

LLM08: Vector and Embedding Weaknesses describes the risks that emerge when this memory is manipulated, stolen, or improperly accessed. If an attacker compromises your vectors, they don't just see your data—they control what your AI "knows."

A common misconception in early AI development was that embeddings were "safe" because they are just lists of numbers (vectors). Surely a human can't read a list of 1,536 floating-point numbers and see a CEO’s private memo, right?

Wrong. By 2026, Embedding Inversion Attacks have become highly sophisticated. Using "surrogate models," attackers can reverse-engineer a vector back into a close approximation of its original text, in some reported cases with over 90% accuracy on short passages. An embedding is an encoding, not encryption: if your vector database is exfiltrated, treat the contents as effectively plain text.

Unlike Data Poisoning, which happens during training, Vector Poisoning happens in your live RAG pipeline.

Many RAG systems are built with a "flat" architecture where the AI has a single API key to the vector store.

Attackers can make imperceptible changes to a query—adding a few "hidden" characters or noise—that shifts the vector just enough to bypass a security filter while still retrieving the malicious content they want.
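One partial defense against the "hidden character" variant of this trick is to normalize queries before they are embedded. The sketch below is a minimal illustration, not a complete countermeasure: it uses only Python's standard `unicodedata` module, the function names `hidden_characters` and `normalize_query` are hypothetical, and it does nothing against perturbations made of visible characters.

```python
import unicodedata


def hidden_characters(text):
    """Return (index, Unicode name) for every format character (category Cf).

    Category Cf covers invisible characters such as zero-width spaces
    and zero-width joiners.
    """
    return [
        (i, unicodedata.name(ch, "UNKNOWN"))
        for i, ch in enumerate(text)
        if unicodedata.category(ch) == "Cf"
    ]


def normalize_query(text):
    """Fold compatibility forms, drop invisible format characters, collapse whitespace."""
    # NFKC maps look-alike compatibility forms (e.g. full-width letters) to canonical ones
    folded = unicodedata.normalize("NFKC", text)
    # Remove format characters that render as nothing
    visible = "".join(ch for ch in folded if unicodedata.category(ch) != "Cf")
    # Collapse any run of whitespace into a single space
    return " ".join(visible.split())


query = "quarterly\u200b report\u200d"
print(hidden_characters(query))
print(normalize_query(query))
```

Logging the output of `hidden_characters` alongside the user ID may also be useful, since legitimate queries rarely contain invisible characters.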

To protect your AI’s memory, you must move beyond simple storage and toward Identity-Aware Retrieval.

In 2026, you cannot rely on the AI to "know" who is allowed to see what. You must enforce security at the database level.

For example, a query issued on Bob's behalf carries his identity as a metadata filter: `search(query_vector, filter={"allowed_users": "bob_123"})`. The database will only "see" and "remember" the chunks Bob is authorized to view.

For maximum security, use databases that support Row-Level Security (RLS) for vectors (like Postgres with pgvector or specialized enterprise stores). This ensures that permissions are checked as part of the database's internal query execution, rather than as a "post-filtering" step in your code.
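To make the filter-before-retrieve idea concrete, here is a minimal in-memory sketch. It is not a real vector database client: the `Chunk` class, the `search` signature, and the sample data are all assumptions made for illustration. The point it shows is that the permission check runs before similarity ranking, so unauthorized chunks are never scored or returned.

```python
import math
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Chunk:
    text: str
    vector: List[float]
    allowed_users: Set[str] = field(default_factory=set)


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(store, query_vector, user_id, top_k=3):
    """Rank only the chunks that user_id is allowed to see."""
    # Permission filter first: unauthorized chunks never reach the ranking step
    visible = [c for c in store if user_id in c.allowed_users]
    ranked = sorted(visible, key=lambda c: cosine(query_vector, c.vector), reverse=True)
    return ranked[:top_k]


# Hypothetical sample data
store = [
    Chunk("Q3 sales summary", [0.9, 0.1], {"bob_123", "alice_456"}),
    Chunk("CEO private memo", [0.8, 0.2], {"alice_456"}),
    Chunk("Cafeteria menu", [0.1, 0.9], {"bob_123"}),
]

results = search(store, [1.0, 0.0], user_id="bob_123")
print([c.text for c in results])  # the CEO memo is absent for Bob
```

In a production system the same filter would be pushed into the database query itself, so that application code never holds chunks the user cannot see.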

Monitor your vector database for unusual retrieval patterns.
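What counts as "unusual" depends on your workload, but one simple signal is a user pulling far more chunks than normal in a short time. The sketch below assumes a hypothetical `RetrievalMonitor` class with arbitrary thresholds (50 chunks per 60 seconds); real deployments would tune these values and likely feed the flags into existing alerting.

```python
from collections import defaultdict, deque


class RetrievalMonitor:
    """Flag users whose retrieved-chunk volume exceeds a limit within a sliding window."""

    def __init__(self, max_chunks=50, window_seconds=60.0):
        self.max_chunks = max_chunks
        self.window_seconds = window_seconds
        # user_id -> deque of (timestamp, chunk_count) events
        self._events = defaultdict(deque)

    def record(self, user_id, chunk_count, now):
        """Record one retrieval; return True if the user is over the limit."""
        events = self._events[user_id]
        events.append((now, chunk_count))
        # Evict events that have fallen out of the window
        while events and now - events[0][0] > self.window_seconds:
            events.popleft()
        total = sum(count for _, count in events)
        return total > self.max_chunks


monitor = RetrievalMonitor(max_chunks=50, window_seconds=60.0)
# Three retrievals of 20 chunks each within 20 seconds
flags = [monitor.record("bob_123", 20, now=t) for t in (0.0, 10.0, 20.0)]
print(flags)  # [False, False, True]
```

Timestamps are passed in explicitly here to keep the example deterministic; a live service would typically use a monotonic clock.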

Treat your vector store with the same rigor as your primary SQL database. Use AES-256 encryption at rest, and make sure all traffic to and from your embedding provider (e.g., OpenAI, Azure, or an on-prem model) travels over an encrypted channel such as TLS.

| Security Feature | Traditional SQL | Vector Database (2026) |
| --- | --- | --- |
| Data Format | Clear Text / Encrypted | High-Dimensional Embeddings |
| Search Logic | Exact Match / Key-based | Semantic Similarity (Approximate) |
| Access Control | Roles/Grants (Strong) | Metadata Filtering (Emerging) |
| Main Threat | SQL Injection | Vector Poisoning / Inversion |

Your vector database is the source of truth for your AI. If that truth is poisoned or leaked, the "intelligence" of your model becomes a liability. By implementing Permission-Aware RAG and treating vectors as identifiable data, you ensure your AI’s memory remains a private, trusted asset.

Securing the memory is vital, but we must also address what happens when the AI is simply wrong or deceptive. This leads us to one of the most visible risks in modern AI.