January 4, 2026

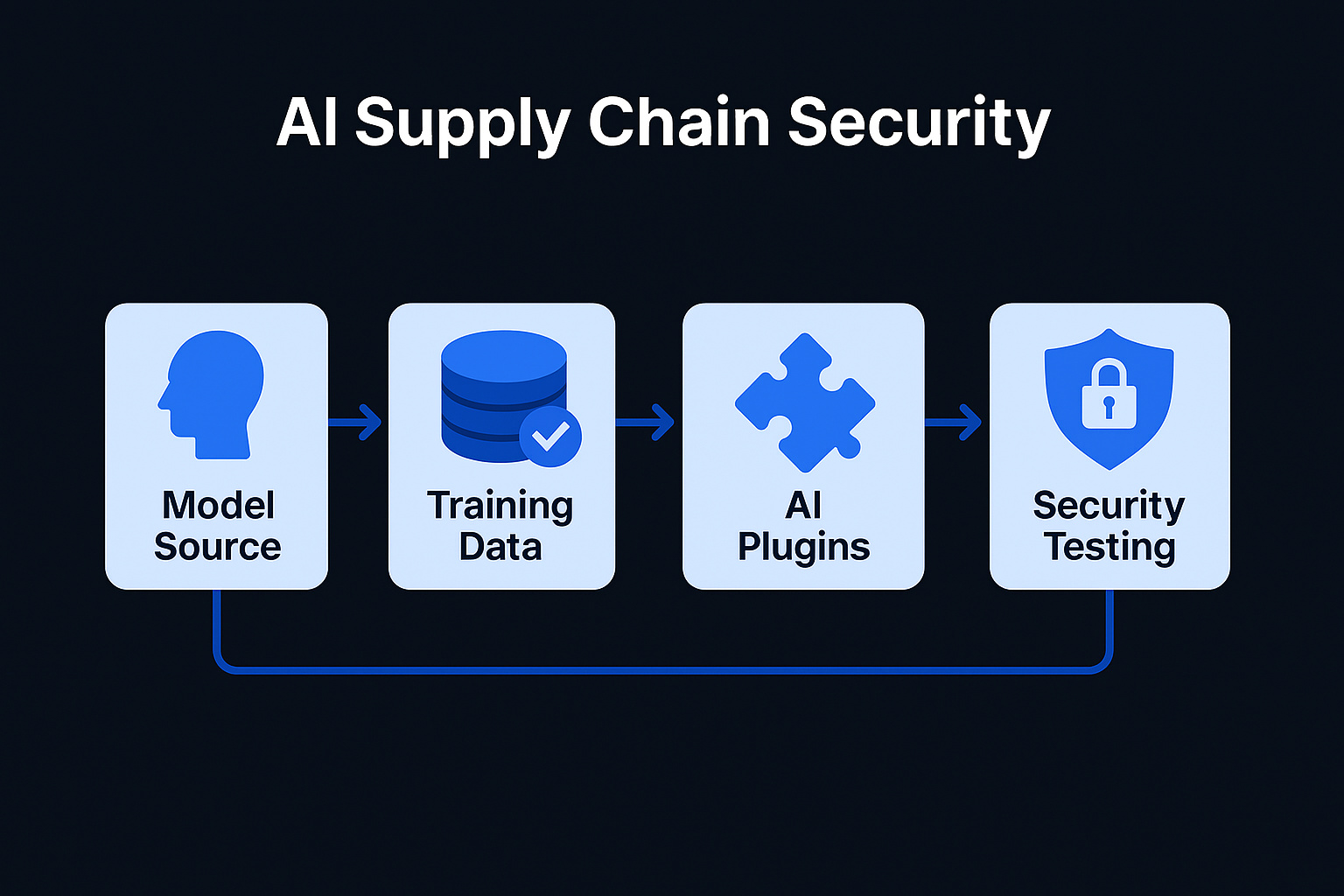

In the traditional software world, we’ve spent decades learning how to secure the supply chain. We use artifacts such as the Software Bill of Materials (SBOM) to track every library and dependency. But in 2026, the AI stack has introduced a whole new set of "black box" dependencies: pre-trained models, massive datasets, and autonomous plugins.

LLM03: Supply Chain Vulnerabilities is currently one of the most overlooked risks in the AI Security Framework. If you are building on a foundation model that has been "backdoored" or using a plugin that hasn't been audited, your entire security posture is built on sand.

Securing an AI application isn't just about your code; it's about the integrity of the third-party assets you consume.

Most companies don't train models from scratch; they download base models from hubs like Hugging Face or use APIs from providers like OpenAI or Anthropic.

If you fine-tune your model on external datasets, you are at the mercy of that data’s creator.

To give LLMs "agency," we connect them to plugins (e.g., a "Calendar Plugin" or a "SQL execution tool").

To mitigate these risks, organizations must move away from "implicit trust" and toward a Verifiable AI Supply Chain.

Just as we use SBOMs for software, AI systems now need an AI Bill of Materials (AIBOM). This document should detail:

Never "pip install" or download a model without verifying its integrity.
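One way to put this into practice is to pin a SHA-256 digest for every model artifact and refuse to load anything that doesn't match. The snippet below is a minimal sketch, not a complete solution: the function names `sha256_of_file` and `verify_model_file` are illustrative, and it assumes you obtained the expected digest from a source you trust (for example, the publisher's release notes) rather than from the same place as the download.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream the file through SHA-256 so large weight files never sit fully in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model_file(path: Path, expected_sha256: str) -> None:
    """Raise ValueError if the file's digest does not match the pinned value."""
    actual = sha256_of_file(path)
    # Normalize the pinned digest (hypothetical input format: hex, any case, maybe a trailing newline).
    expected = expected_sha256.strip().lower()
    if not hmac.compare_digest(actual, expected):
        raise ValueError(f"Integrity check failed for {path.name}: got {actual}")
```

Call `verify_model_file` before handing the path to your model loader. A hash only proves the file is the one you pinned; it says nothing about whether the pinned model was trustworthy in the first place, which is why signing and provenance records still matter.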

If your AI uses a plugin to execute tasks, treat that plugin as a high-risk dependency.
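To make "high-risk dependency" concrete, here is a rough sketch of a least-privilege gate that sits between the model and its plugins. Everything in it is an assumption for illustration: the `Plugin` and `PluginGateway` classes, the scope strings, and the idea that each plugin declares the scopes it needs up front. It covers only the permission check; real sandboxing (process isolation, network limits) would sit on top of this.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, FrozenSet


@dataclass(frozen=True)
class Plugin:
    name: str
    handler: Callable[..., object]
    required_scopes: FrozenSet[str]  # hypothetical scope strings, e.g. "calendar:read"


@dataclass
class PluginGateway:
    granted_scopes: FrozenSet[str]
    registry: Dict[str, Plugin] = field(default_factory=dict)

    def register(self, plugin: Plugin) -> None:
        """Add an audited plugin to the allowlist."""
        self.registry[plugin.name] = plugin

    def call(self, name: str, **kwargs: object) -> object:
        """Run a plugin only if it is registered and every scope it needs was granted."""
        plugin = self.registry.get(name)
        if plugin is None:
            raise PermissionError(f"Plugin {name!r} is not on the allowlist")
        missing = plugin.required_scopes - self.granted_scopes
        if missing:
            raise PermissionError(
                f"Plugin {name!r} needs ungranted scopes: {sorted(missing)}"
            )
        return plugin.handler(**kwargs)
```

With a gateway created as `PluginGateway(granted_scopes=frozenset({"calendar:read"}))`, a calendar plugin that needs only `calendar:read` runs normally, while a SQL tool that declares `db:write` is rejected before its handler is ever invoked.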

If you use a closed-source API (like GPT-4o or Claude 3.5), you don't control the supply chain—the provider does.
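Since you can't inspect a hosted model, one practical option is to watch its behavior instead. The sketch below shows a tiny regression harness you could run on a schedule or in CI. It is an assumption-laden example: `complete` stands in for whatever wrapper you already have around your provider's API, and `RegressionCase` and `run_regression` are hypothetical names, not part of any vendor SDK.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class RegressionCase:
    prompt: str
    check: Callable[[str], bool]  # returns True when the reply is acceptable
    description: str


def run_regression(
    complete: Callable[[str], str], cases: List[RegressionCase]
) -> List[str]:
    """Send each fixed prompt to the model and return descriptions of failed checks."""
    failures = []
    for case in cases:
        reply = complete(case.prompt)
        if not case.check(reply):
            failures.append(case.description)
    return failures
```

An empty list means every check passed. A non-empty list after a provider-side model update is a signal to investigate before that change reaches your users.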

| Component | Risk Level | Primary Defense |
| --- | --- | --- |
| Foundation Models | High | Hashing, Signing, & AIBOMs |
| Training Datasets | Medium | Data Provenance & Anomaly Detection |
| AI Plugins | Very High | Sandboxing & Least Privilege Access |
| Third-Party APIs | Medium | Continuous Regression & Safety Testing |

Before moving your AI application to production, ensure your security team can answer "Yes" to the following:

The AI supply chain is complex, involving millions of lines of code and billions of parameters. You cannot secure what you do not track. By implementing AIBOMs and strict plugin sandboxing, you turn a "black box" into a transparent, secure foundation.

Securing your supply chain is a non-negotiable part of the AI Security Framework. Once your components are vetted, the next step is ensuring the data used to train them hasn't been compromised.